A.I. Frenzy Complicates Efforts to Keep Power-Hungry Data Sites Green

West Texas, from the oil rigs of the Permian Basin to the wind turbines twirling above the High Plains, has long been a magnet for companies seeking fortunes in energy.

Now, those arid ranch lands are offering a new moneymaking opportunity: data centers.

Lancium, an energy and data center management firm setting up shop in Fort Stockton and Abilene, is one of many companies around the country betting that building data centers close to generating sites will allow them to tap into underused clean power.

“It’s a land grab,” said Lancium’s president, Ali Fenn.

In the past, companies built data centers close to internet users, to better meet consumer requests, like streaming a show on Netflix or playing a video game hosted in the cloud. But the growth of artificial intelligence requires huge data centers to train the evolving large language models, making proximity to users less necessary.

But as more of these sites pop up across the United States, there are new questions about whether they can meet the demand while still operating sustainably. The carbon footprint from the construction of the centers and the racks of expensive computer equipment is substantial in itself, and their power needs have grown considerably.

Just a decade ago, data centers drew 10 megawatts of power, but 100 megawatts is common today. The Uptime Institute, an industry advisory group, has identified 10 supersize cloud computing campuses across North America with an average size of 621 megawatts.

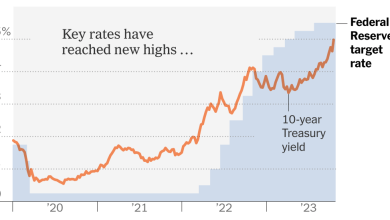

This growth in electricity demand comes as manufacturing in the United States is at its highest level in half a century, and the power grid is becoming increasingly strained.